DNA identification stands at the crossroads of scientific precision and technological innovation, yet errors can emerge from both laboratory procedures and algorithmic processing, demanding careful scrutiny.

🧬 The Foundation of DNA Identification Technology

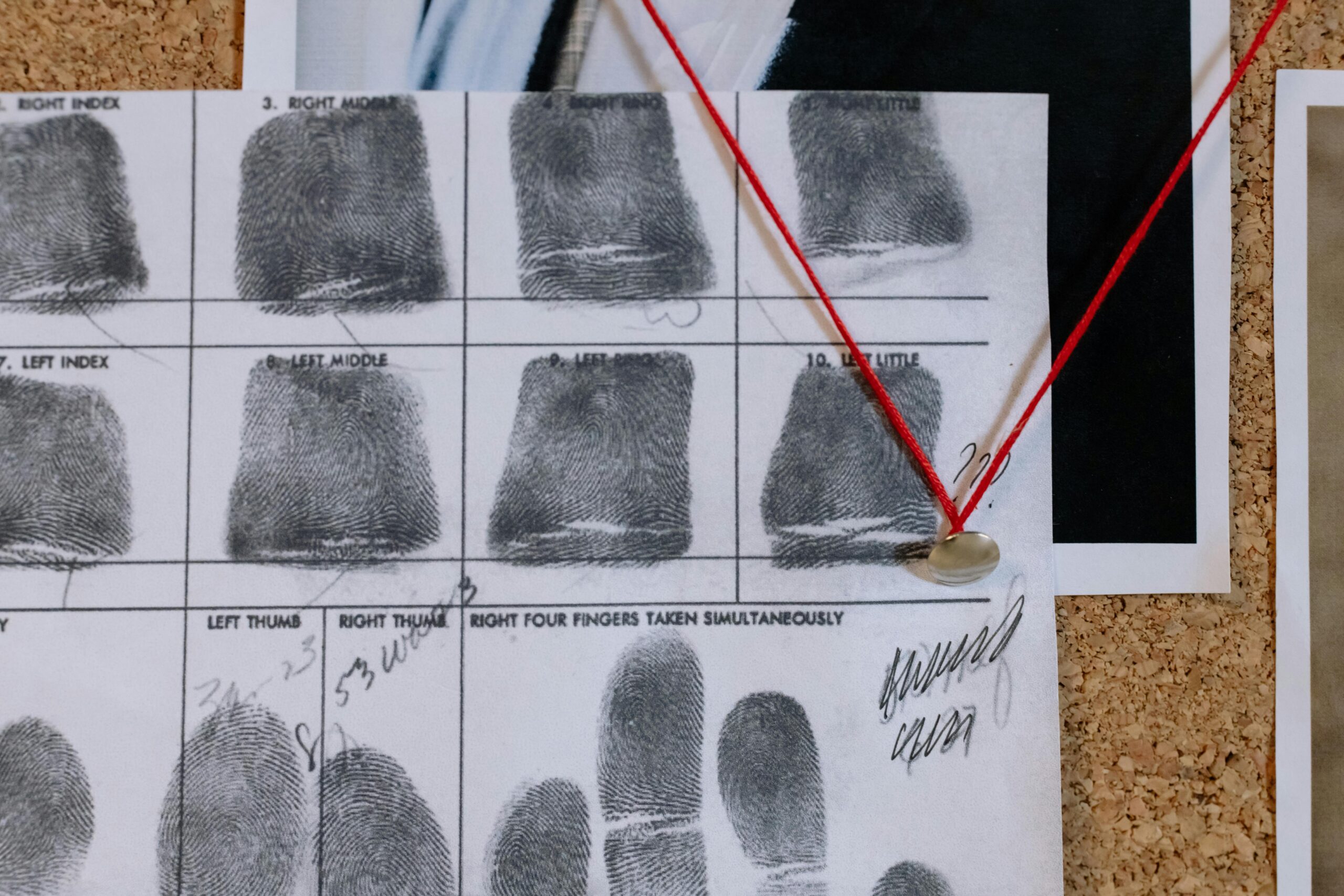

DNA identification has revolutionized forensic science, paternity testing, and criminal investigations over the past few decades. This powerful tool relies on analyzing specific regions of DNA that vary between individuals, producing genetic profiles that, except in identical twins, are effectively unique and can identify or exclude suspects with remarkable accuracy. However, as with any scientific process involving human intervention and technological systems, the possibility of error exists at multiple stages.

The DNA identification process typically involves collecting biological samples, extracting DNA, amplifying specific genetic markers (usually short tandem repeat, or STR, loci) through polymerase chain reaction (PCR), separating and detecting the amplified fragments with specialized equipment such as capillary electrophoresis instruments, and interpreting the data with statistical software. Each of these stages presents opportunities for both laboratory errors and algorithmic miscalculations that can compromise the integrity of results.

Understanding Laboratory Errors in DNA Analysis

Laboratory errors represent the human and procedural mistakes that occur during the physical handling and processing of DNA samples. These errors can happen at any point in the chain of custody, from sample collection to final analysis, and they often stem from controllable factors within the laboratory environment.

Sample Contamination and Collection Issues

One of the most common laboratory errors is sample contamination, which occurs when foreign DNA mixes with the evidence sample. This can happen during collection at crime scenes, during transportation, or within the laboratory itself. Even minute amounts of contaminating DNA from investigators, laboratory technicians, or environmental sources can skew results significantly.

Proper collection protocols require sterile equipment, protective gear, and meticulous documentation. When these standards slip, the integrity of the entire analysis becomes questionable. Cross-contamination between samples processed simultaneously poses another significant risk, particularly in high-volume laboratories where multiple cases undergo analysis concurrently.
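Many laboratories guard against contamination by staff and investigators by screening evidence profiles against an elimination database of profiles from people who handle evidence. The sketch below shows the basic comparison for a single-source profile. It is a minimal illustration under stated assumptions: the `Profile` representation, the function names, the `min_loci` cutoff, and all locus and allele values are hypothetical, and real mixture comparisons need far more careful logic.

```python
from typing import Dict, FrozenSet, List

# Hypothetical representation: STR locus name -> set of allele designations.
Profile = Dict[str, FrozenSet[str]]


def matches_at_all_shared_loci(evidence: Profile, reference: Profile,
                               min_loci: int = 3) -> bool:
    """True if both profiles share >= min_loci typed loci and agree at every one.

    The min_loci default is an illustrative assumption, not a published standard.
    """
    shared = set(evidence) & set(reference)
    if len(shared) < min_loci:
        return False
    return all(evidence[locus] == reference[locus] for locus in shared)


def screen_against_elimination_db(evidence: Profile,
                                  staff: Dict[str, Profile]) -> List[str]:
    """Return IDs of staff members whose reference profile matches the evidence."""
    return [person for person, ref in staff.items()
            if matches_at_all_shared_loci(evidence, ref)]


def make_profile(**loci: str) -> Profile:
    """Helper: make_profile(TH01="6,9.3") -> {"TH01": frozenset({"6", "9.3"})}."""
    return {locus: frozenset(alleles.split(",")) for locus, alleles in loci.items()}


staff_db = {
    "tech_A": make_profile(D8S1179="13,14", D21S11="29,30", TH01="6,9.3"),
    "tech_B": make_profile(D8S1179="12,15", D21S11="28,31", TH01="7,8"),
}
evidence = make_profile(D8S1179="13,14", D21S11="29,30", TH01="6,9.3")
print(screen_against_elimination_db(evidence, staff_db))  # ['tech_A']
```

A hit does not by itself prove contamination, but it tells the analyst which profile must be explained before the result is reported.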

Human Technical Errors During Processing

Laboratory technicians must follow precise protocols throughout DNA extraction and amplification. Small deviations in temperature, timing, reagent quantities, or handling procedures can produce unreliable results. Mislabeling samples represents another critical error category that can lead to catastrophic misidentification.

Equipment malfunction or improper calibration also falls under laboratory error. Thermal cyclers, electrophoresis machines, and sequencing equipment require regular maintenance and quality checks. When these machines operate outside specifications, they can generate inaccurate data that appears scientifically valid on the surface.

🔬 The Rise of Algorithmic Processing in DNA Analysis

Modern DNA identification increasingly relies on complex algorithms and software to interpret genetic data. These computational tools analyze the raw output from laboratory equipment, compare genetic profiles against databases, calculate statistical probabilities, and generate reports for investigators and courts.

Algorithmic processing offers tremendous advantages in speed, consistency, and the ability to handle complex mixed samples or degraded DNA. However, this technological advancement introduces a new category of errors that differ fundamentally from traditional laboratory mistakes.

How DNA Algorithms Function

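DNA analysis algorithms use mathematical models to interpret peaks and patterns in genetic data. They must distinguish true alleles from background noise, artifacts, and stutter peaks (small products, typically one repeat unit shorter than the true allele, that arise naturally during PCR amplification). Probabilistic genotyping systems go further, using statistical models, often with Markov chain Monte Carlo sampling, to weigh many possible genotype combinations instead of applying simple pass/fail rules to each peak.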
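A heavily simplified, rule-based version of this peak filtering can be sketched for a single locus. It assumes two hypothetical parameters: an analytical threshold below which peaks are treated as noise, and a single stutter ratio for flagging peaks one repeat below a taller peak. Production software, and especially probabilistic genotyping, models these effects statistically rather than with hard cutoffs, so this only illustrates the idea.

```python
from typing import List, Tuple

# (allele repeat number, peak height in RFU) for one locus
Peak = Tuple[float, float]


def call_alleles(peaks: List[Peak],
                 analytical_threshold: float = 50.0,
                 stutter_ratio: float = 0.15) -> List[float]:
    """Return allele calls for one locus after noise and stutter filtering.

    Both defaults are illustrative assumptions; real values come from each
    laboratory's internal validation and usually differ by locus.
    """
    # Step 1: discard peaks below the analytical threshold as baseline noise.
    # Rounding to one decimal keeps microvariants such as 9.3 usable as dict keys.
    kept = [(round(a, 1), h) for a, h in peaks if h >= analytical_threshold]
    height_of = dict(kept)

    calls = []
    for allele, height in kept:
        # Step 2: a peak one repeat shorter than another peak may be back stutter.
        # Treat it as stutter if it is no taller than stutter_ratio x parent height.
        parent_height = height_of.get(round(allele + 1, 1))
        if parent_height is not None and height <= stutter_ratio * parent_height:
            continue
        calls.append(allele)
    return sorted(calls)


peaks = [(13.0, 120.0), (14.0, 1500.0), (15.0, 30.0), (16.0, 1400.0)]
print(call_alleles(peaks))  # [14.0, 16.0]
```

The same rule can also discard a genuine minor-contributor allele that happens to sit in a stutter position, which is one reason mixtures are hard to interpret with fixed cutoffs.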

These programs also calculate likelihood ratios and match probabilities when comparing unknown samples to reference profiles. The statistical frameworks underlying these calculations rest on assumptions about population genetics, allele frequencies, and independence of genetic markers.
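For a single-source profile, the textbook calculation combines two of these assumptions: Hardy-Weinberg equilibrium within each locus and independence across loci (the "product rule"). The sketch below uses invented allele frequencies and a hypothetical three-locus profile; casework typically uses 20 or more loci, published frequency databases, and population-structure corrections such as the NRC II theta adjustment, none of which are shown here.

```python
import math
from typing import Dict, FrozenSet

Profile = Dict[str, FrozenSet[str]]      # locus -> observed alleles (single source)
FreqTable = Dict[str, Dict[str, float]]  # locus -> allele -> population frequency


def genotype_frequency(alleles: FrozenSet[str], freqs: Dict[str, float]) -> float:
    """Hardy-Weinberg genotype frequency: p^2 for a homozygote, 2pq for a heterozygote."""
    if len(alleles) == 1:
        (a,) = alleles
        return freqs[a] ** 2
    a, b = sorted(alleles)  # raises ValueError for >2 alleles, i.e. a mixture
    return 2 * freqs[a] * freqs[b]


def random_match_probability(profile: Profile, table: FreqTable) -> float:
    """Product rule: multiply per-locus frequencies, assuming the loci are independent."""
    return math.prod(genotype_frequency(alleles, table[locus])
                     for locus, alleles in profile.items())


# Invented frequencies for illustration only.
table = {
    "D8S1179": {"13": 0.30, "14": 0.20},
    "TH01": {"6": 0.25, "9.3": 0.30},
    "vWA": {"17": 0.25},
}
profile = {
    "D8S1179": frozenset({"13", "14"}),
    "TH01": frozenset({"6", "9.3"}),
    "vWA": frozenset({"17"}),
}
rmp = random_match_probability(profile, table)
print(f"RMP = {rmp:.4g}, LR = {1 / rmp:.4g}")
```

Here the random match probability is 0.12 × 0.15 × 0.0625, about 0.001125. Under simple "same source" versus "unrelated person" hypotheses, the likelihood ratio for a matching single-source profile is 1 / RMP, roughly 889 in this toy case.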

Sources of Algorithmic Error

Algorithmic errors emerge from several distinct sources. Programming bugs represent the most straightforward category: actual mistakes in the code that cause incorrect calculations or data handling. Software testing aims to catch these issues, but complex programs can still contain undiscovered bugs that manifest only under specific circumstances.

More insidious are errors stemming from flawed underlying assumptions or from applying algorithms to data they were not designed for. An algorithm optimized for single-source samples may perform poorly when analyzing mixtures of DNA from multiple contributors. Similarly, match statistics depend on allele frequency databases, so a calculation that uses frequencies from one population may misstate the strength of the evidence when the relevant population differs, and a model trained or validated mainly on profiles from one group may perform less reliably on others.
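One simple guard against applying a single-source model to a mixture is to check allele counts first. The sketch below uses the maximum-allele-count method: because a person carries at most two alleles at an autosomal STR locus, more than two alleles at any locus signals at least two contributors. The function names and sample data are hypothetical. The estimate is only a lower bound: allele sharing and drop-out can hide contributors, and rare three-banded patterns in a single person can inflate the count.

```python
import math
from typing import Dict, Set


def min_contributors(profile: Dict[str, Set[str]]) -> int:
    """Maximum-allele-count lower bound on the number of contributors.

    Each person carries at most two alleles per autosomal STR locus, so a locus
    showing n alleles implies at least ceil(n / 2) contributors.
    """
    max_alleles = max(len(alleles) for alleles in profile.values())
    return math.ceil(max_alleles / 2)


def safe_for_single_source_model(profile: Dict[str, Set[str]]) -> bool:
    """Hypothetical guard: refuse single-source interpretation if a mixture is evident."""
    return min_contributors(profile) == 1


sample = {
    "D8S1179": {"12", "13", "14"},
    "D21S11": {"28", "29", "30", "31.2"},
    "TH01": {"6", "9.3"},
}
print(min_contributors(sample))              # 2
print(safe_for_single_source_model(sample))  # False
```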

Threshold settings within algorithms also critically affect results. Setting the analytical threshold (the minimum peak height, measured in relative fluorescence units or RFU, that is treated as a real signal) too low increases false positives by mistaking noise for genuine alleles. Conversely, thresholds set too high may miss legitimate alleles, particularly in low-template or degraded samples.
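The trade-off can be made concrete by taking a set of peaks whose true status is known, as in a validation study, and counting how many noise peaks each candidate threshold lets through and how many real alleles it discards. The heights and labels below are invented for illustration.

```python
from typing import List, Tuple

# (peak height in RFU, whether the peak is a true allele): synthetic, hypothetical data
Peak = Tuple[float, bool]


def threshold_tradeoff(peaks: List[Peak], threshold: float) -> Tuple[int, int]:
    """Return (noise peaks wrongly called as alleles, true alleles missed) at a threshold."""
    false_calls = sum(1 for height, real in peaks if height >= threshold and not real)
    missed = sum(1 for height, real in peaks if height < threshold and real)
    return false_calls, missed


peaks = [(35, False), (48, False), (62, False),
         (55, True), (90, True), (400, True), (1200, True)]
for t in (30, 50, 75, 150):
    print(t, threshold_tradeoff(peaks, t))
# 30 (3, 0)
# 50 (1, 0)
# 75 (0, 1)
# 150 (0, 2)
```

No threshold brings both counts to zero in this made-up data, which is why laboratories derive thresholds from their own validation data rather than picking a round number.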

Distinguishing Between Laboratory and Algorithmic Errors

Identifying whether an error originated in the laboratory or the algorithm requires systematic investigation. This distinction matters enormously because the corrective actions differ significantly, and understanding the error source helps prevent future occurrences.

Diagnostic Indicators of Laboratory Errors

Laboratory errors often leave characteristic signatures in the data. Contamination typically introduces unexpected alleles that don’t fit expected patterns. Complete profile failures or unusually weak signals may indicate problems with DNA extraction or quantification. Inconsistencies between replicate analyses of the same sample strongly suggest laboratory issues rather than algorithmic problems.
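Comparing replicates is easy to automate. The hypothetical helper below lists the loci where two replicate amplifications of the same extract disagree and shows which replicate each extra allele appeared in. An allele seen in only one replicate (like the invented "16" here) is the kind of signature that can indicate drop-in, contamination, or drop-out; deciding which requires the analyst's review of peak heights and controls.

```python
from typing import Dict, FrozenSet, List, Tuple

Profile = Dict[str, FrozenSet[str]]  # locus -> alleles called


def replicate_discordance(
        rep1: Profile, rep2: Profile) -> List[Tuple[str, FrozenSet[str], FrozenSet[str]]]:
    """Report loci where two replicate amplifications of one extract disagree.

    Each entry is (locus, alleles seen only in rep1, alleles seen only in rep2);
    a locus typed in only one replicate is reported as discordant too.
    """
    report = []
    for locus in sorted(set(rep1) | set(rep2)):
        a = rep1.get(locus, frozenset())
        b = rep2.get(locus, frozenset())
        if a != b:
            report.append((locus, a - b, b - a))
    return report


rep1 = {"D8S1179": frozenset({"13", "14"}), "TH01": frozenset({"6", "9.3"})}
rep2 = {"D8S1179": frozenset({"13", "14", "16"}), "TH01": frozenset({"6", "9.3"})}
print(replicate_discordance(rep1, rep2))
# [('D8S1179', frozenset(), frozenset({'16'}))]
```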

Documentation review proves essential for identifying laboratory errors. Chain of custody records, technician notes, equipment logs, and quality control data can reveal procedural deviations or equipment malfunctions. Laboratories following proper protocols maintain detailed records that enable retrospective error analysis.

Recognizing Algorithmic Errors

Algorithmic errors manifest differently than laboratory mistakes. They tend to produce consistent, reproducible results that appear scientifically plausible but contain systematic biases or miscalculations. If the same sample analyzed multiple times yields identical results, yet those results conflict with other evidence or expectations, algorithmic error becomes more likely than laboratory contamination.

Version differences in software can also signal algorithmic issues. If updating the analysis software changes the interpretation of historical data, the discrepancy points to the software (in the old version, the new one, or both) rather than to the original laboratory work. Discrepancies between different analysis programs processing the same raw data similarly point toward algorithmic rather than laboratory sources.
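A regression check of this kind can be sketched as follows: rerun the same raw data through two software versions (or two programs), then flag cases whose reported log10 likelihood ratio moved by more than a chosen tolerance. The case IDs, values, and tolerance are hypothetical. Some probabilistic genotyping tools use Markov chain Monte Carlo sampling and therefore vary slightly from run to run, so the tolerance must exceed that normal spread or ordinary sampling noise will be flagged as a discrepancy.

```python
from typing import Dict, List


def flag_version_discrepancies(old: Dict[str, float], new: Dict[str, float],
                               tolerance: float = 1.0) -> List[str]:
    """Return case IDs whose log10(LR) moved by more than `tolerance` between runs.

    Cases present in only one run are flagged too. The default tolerance (one order
    of magnitude) is an illustrative assumption, not a standard.
    """
    flagged = []
    for case in sorted(set(old) | set(new)):
        if case not in old or case not in new:
            flagged.append(case)
        elif abs(old[case] - new[case]) > tolerance:
            flagged.append(case)
    return flagged


# Hypothetical log10(LR) values for the same raw data under two software versions.
v1 = {"case-001": 12.4, "case-002": 6.1, "case-003": 3.0}
v2 = {"case-001": 12.6, "case-002": 2.9, "case-003": 3.2, "case-004": 5.0}
print(flag_version_discrepancies(v1, v2))  # ['case-002', 'case-004']
```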

⚖️ Real-World Consequences of Identification Errors

Errors in DNA identification carry profound real-world consequences that extend far beyond abstract scientific concerns. Criminal convictions, paternity determinations, immigration cases, and mass disaster victim identification all rely on accurate DNA analysis.

False matches can lead to wrongful convictions, while false exclusions may allow guilty parties to escape justice. The Innocence Project has documented numerous wrongful convictions in which forensic evidence initially presented as conclusive later proved faulty on reexamination; misapplied forensic science is among the most common contributing factors in its cases.

Case Studies Highlighting Error Impact

Several high-profile cases illustrate how both laboratory and algorithmic errors can compromise justice. In 2017, the New York City Office of Chief Medical Examiner came under intense scrutiny over the Forensic Statistical Tool (FST), software it had developed in-house to calculate likelihood ratios for complex DNA mixtures and had used in many criminal cases. Critics, including defense experts, argued that the tool could overstate the strength of matches, and the office stopped using it that year.

Laboratory contamination errors have similarly impacted cases worldwide. The German “Phantom of Heilbronn” case involved DNA attributed to a female serial killer that appeared at numerous crime scenes across Europe. Eventually, investigators discovered the DNA belonged to a factory worker who packaged the cotton swabs used for evidence collection—a contamination error rather than evidence of criminal activity.

Quality Assurance and Error Prevention Strategies

Preventing DNA identification errors requires comprehensive quality assurance programs addressing both laboratory procedures and algorithmic processing. Leading forensic laboratories implement multiple overlapping safeguards to catch errors before they affect case outcomes.

Laboratory Quality Control Measures

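Accreditation through bodies such as ANAB (which absorbed the American Society of Crime Laboratory Directors' former accreditation program, ASCLD/LAB, in 2016), together with the FBI's Quality Assurance Standards for forensic DNA testing laboratories, provides a framework for maintaining laboratory quality. These standards require regular proficiency testing, equipment and method validation, documented procedures, and documented analyst qualifications.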

Blind quality control samples, where technicians unknowingly process samples with known profiles mixed into routine casework, provide realistic assessments of laboratory performance. Regular audits by external reviewers add another layer of accountability.

Algorithmic Validation and Oversight

Algorithms used in forensic DNA analysis require rigorous validation before operational deployment. Validation studies test software performance across diverse sample types, including challenging scenarios like degraded DNA, mixtures, and samples with artifacts.
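Sensitivity testing is one such validation study: a known donor's DNA is amplified across a dilution series, and the fraction of expected alleles that fail to appear is tracked at each input amount. The sketch below shows that bookkeeping with invented counts; the 40-allele profile and the picogram levels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def dropout_rate_by_template(
        results: Iterable[Tuple[float, int, int]]) -> Dict[float, float]:
    """Pool allele drop-out rates by input DNA quantity.

    Each result is (template amount in pg, alleles expected from the known donor,
    how many of those expected alleles were detected).
    """
    expected: Dict[float, int] = defaultdict(int)
    detected: Dict[float, int] = defaultdict(int)
    for template_pg, n_expected, n_detected in results:
        expected[template_pg] += n_expected
        detected[template_pg] += n_detected
    return {t: 1 - detected[t] / expected[t] for t in sorted(expected)}


# Invented dilution-series results for a known donor (two replicates per level).
runs = [(500, 40, 40), (500, 40, 40),
        (100, 40, 39), (100, 40, 37),
        (25, 40, 30), (25, 40, 26)]
for template, rate in dropout_rate_by_template(runs).items():
    print(f"{template} pg: {rate:.0%} drop-out")
# 25 pg: 30% drop-out
# 100 pg: 5% drop-out
# 500 pg: 0% drop-out
```

A rate that climbs sharply at low template amounts marks where results, and the software interpreting them, need extra caution.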

Open-source algorithms enable independent review by the scientific community, potentially identifying flaws more effectively than proprietary closed systems. However, many forensic software programs remain commercially protected, limiting external scrutiny and raising transparency concerns.

🔍 The Role of Expert Review and Testimony

Human experts remain essential for interpreting DNA results, particularly in complex cases or when errors are suspected. Expert witnesses must understand both laboratory procedures and algorithmic processing to provide meaningful testimony about result reliability.

Competent experts can identify red flags suggesting errors, explain uncertainty inherent in probabilistic analyses, and communicate technical concepts to non-specialist audiences. Unfortunately, some experts lack sufficient training in algorithmic methods, while others may have conflicts of interest that bias their interpretations.

Cross-Examination and Error Discovery

Adversarial legal proceedings provide mechanisms for uncovering DNA identification errors. Defense attorneys with access to forensic experts can challenge laboratory procedures, question algorithmic assumptions, and request raw data for independent reanalysis.

However, many defendants lack resources for effective expert consultation, creating inequities in error detection. Underfunded public defender offices may not challenge DNA evidence even when legitimate questions exist about its reliability.

Emerging Technologies and Future Error Landscapes

Rapid advancements in DNA sequencing technology and artificial intelligence are transforming identification capabilities while introducing new error possibilities. Next-generation sequencing provides vastly more genetic information than traditional STR profiling, but also generates massive datasets requiring sophisticated algorithmic interpretation.

Machine learning algorithms show promise for analyzing complex DNA mixtures and degraded samples that challenge conventional methods. These AI systems learn patterns from training data rather than following explicitly programmed rules. While potentially more accurate, they also introduce opacity—even developers may not fully understand how neural networks reach specific conclusions.

Addressing Bias in Algorithmic Systems

Growing awareness of algorithmic bias across various fields has prompted scrutiny of DNA analysis software. If training datasets underrepresent certain population groups, machine learning algorithms may perform poorly for those populations. Ensuring diverse, representative training data becomes critical for equitable error rates across different communities.
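Checking for unequal error rates starts with simple bookkeeping: run the system on test samples whose true answers are known, then compute the error rate separately for each population group. The group labels and outcomes below are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def error_rates_by_group(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of incorrect results per population group in a ground-truth test set.

    Each outcome is (group label, whether the system's conclusion was correct).
    """
    totals: Counter = Counter()
    errors: Counter = Counter()
    for group, correct in outcomes:
        totals[group] += 1
        if not correct:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in sorted(totals)}


# Hypothetical validation outcomes for two groups.
outcomes = ([("group_A", True)] * 95 + [("group_A", False)] * 5 +
            [("group_B", True)] * 80 + [("group_B", False)] * 20)
print(error_rates_by_group(outcomes))  # {'group_A': 0.05, 'group_B': 0.2}
```

With small test sets, per-group rates are noisy, so a real audit would also report confidence intervals before concluding that one group is served worse than another.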

Transparency initiatives seek to make algorithmic decision-making processes more understandable and auditable. Explainable AI techniques aim to clarify how algorithms reach conclusions, enabling more meaningful human oversight and error detection.

💡 Practical Steps for Stakeholders

Different stakeholders in the DNA identification ecosystem can take specific actions to minimize errors and their consequences. Laboratories should prioritize comprehensive training, maintain rigorous quality standards, and foster cultures where technicians feel comfortable reporting potential errors without fear of retribution.

Software developers must implement thorough testing protocols, document algorithmic assumptions and limitations clearly, and respond promptly to bug reports or validation concerns. Regulatory bodies should establish minimum standards for algorithmic validation and require regular performance audits.

Legal professionals need education about DNA technology capabilities and limitations. Judges should scrutinize the admissibility of novel DNA analysis methods, while defense attorneys must have resources to challenge questionable evidence effectively.

Building a More Reliable Future

Navigating the complex landscape where laboratory errors and algorithmic errors intersect requires ongoing vigilance, transparency, and commitment to scientific rigor. Neither traditional laboratory quality control nor algorithmic validation alone suffices—comprehensive approaches addressing both domains are essential.

The forensic science community continues evolving standards and best practices as technology advances. Collaborative efforts between laboratory scientists, computer scientists, statisticians, legal professionals, and policymakers can strengthen DNA identification systems while remaining alert to emerging error sources.

Ultimately, acknowledging that errors can occur represents the first step toward minimizing their frequency and impact. DNA identification remains an extraordinarily powerful investigative tool, but only when practitioners approach it with appropriate humility about its limitations and potential pitfalls. By distinguishing between laboratory and algorithmic error sources, investigators can implement targeted solutions that enhance accuracy while maintaining the technology’s tremendous benefits for justice and truth-seeking. 🎯

Toni Santos is a biological systems researcher and forensic science communicator focused on structural analysis, molecular interpretation, and botanical evidence studies. His work investigates how plant materials, cellular formations, genetic variation, and toxin profiles contribute to scientific understanding across ecological and forensic contexts. With a multidisciplinary background in biological pattern recognition and conceptual forensic modeling, Toni translates complex mechanisms into accessible explanations that empower learners, researchers, and curious readers. His interests bridge structural biology, ecological observation, and molecular interpretation.

As the creator of zantrixos.com, Toni explores:

Botanical Forensic Science: the role of plant materials in scientific interpretation
Cellular Structure Matching: the conceptual frameworks behind cellular comparison and classification
DNA-Based Identification: an accessible view of molecular markers and structural variation
Toxin Profiling Methods: understanding toxin behavior and classification through conceptual models

Toni's work highlights the elegance and complexity of biological structures and invites readers to engage with science through curiosity, respect, and analytical thinking. Whether you're a student, researcher, or enthusiast, he encourages you to explore the details that shape biological evidence and inform scientific discovery.