Artificial intelligence is transforming how scientists identify, track, and interpret complex toxin patterns in biological and environmental data at unprecedented speed and accuracy.

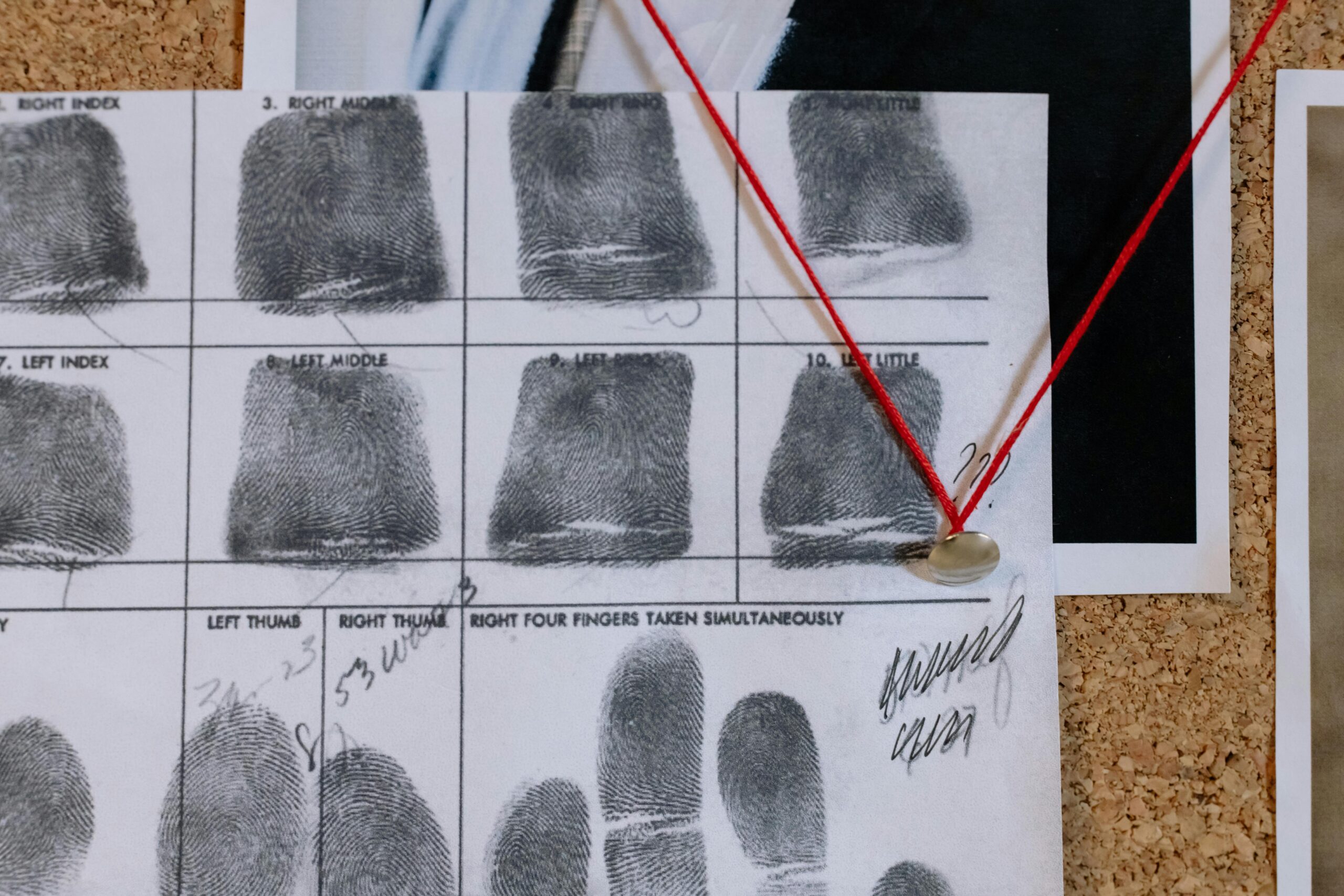

🔬 The Dawn of Intelligent Toxin Detection

Traditional toxin analysis has long been a laborious process requiring extensive manual review, specialized expertise, and significant time investment. Researchers would spend countless hours examining data sets, cross-referencing chemical signatures, and attempting to identify patterns that might indicate the presence of dangerous substances. This conventional approach, while effective in many cases, is increasingly unable to keep pace with the growing complexity and volume of toxicological data generated in modern research environments.

The integration of artificial intelligence into toxin data analysis represents a paradigm shift in how we approach this critical scientific challenge. Machine learning algorithms can now process in minutes massive datasets that would take human analysts weeks or months to evaluate. These systems don’t just work faster; they also identify patterns and correlations that might escape even the most experienced toxicologists, opening new frontiers in environmental monitoring, pharmaceutical development, and public health protection.

Understanding the Complexity of Toxin Data

Toxicological data exists in multiple dimensions, encompassing chemical structures, biological responses, environmental conditions, and temporal factors. Each toxin may produce different effects depending on dosage, exposure duration, individual susceptibility, and numerous other variables. This multifaceted nature creates datasets of extraordinary complexity that challenge traditional analytical methods.

Modern toxicology generates data from diverse sources including mass spectrometry, genomic sequencing, cellular assays, animal studies, and epidemiological investigations. Each data stream provides valuable insights, but integrating these disparate information sources into a coherent understanding has historically proven difficult. The heterogeneous nature of toxicological information demands analytical approaches capable of handling structured and unstructured data simultaneously.

The Data Volume Challenge

Environmental monitoring programs alone generate terabytes of chemical detection data annually. When combined with clinical toxicology records, pharmaceutical safety databases, and research studies, the information volume becomes staggering. This data deluge presents both opportunity and obstacle: more data can in principle enable better insights, but extracting meaningful patterns becomes much harder as datasets grow in size and dimensionality.

🤖 How AI Transforms Pattern Recognition in Toxicology

Artificial intelligence excels at identifying subtle patterns within complex, high-dimensional datasets. Machine learning algorithms can detect correlations between seemingly unrelated variables, revealing toxicological relationships that might remain hidden to human observers. These systems learn from examples, continuously improving their pattern recognition capabilities as they process more data.

Deep learning networks, inspired by the structure of biological neural systems, prove particularly effective for toxin identification tasks. Convolutional neural networks can analyze spectroscopic data with remarkable precision, identifying chemical signatures indicative of specific toxins. Recurrent neural networks excel at processing time-series data, tracking how toxin concentrations change over time and predicting future trends based on historical patterns.

Real-Time Analysis Capabilities

One of AI’s most transformative contributions to toxin analysis is the ability to process data in real time. Environmental sensors connected to AI-powered analytical systems can now detect emerging contamination events as they occur, triggering immediate alerts and enabling rapid response. This capability represents a fundamental improvement over traditional batch processing approaches, which introduce significant delays between data collection and analysis.
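As a rough illustration of streaming detection, the sketch below flags sensor readings that sit far above a rolling baseline. It is a simple statistical stand-in rather than a learned model, and the function name `detect_spikes`, the window size, the threshold, and the sample readings are all assumptions made for the example.

```python
from collections import deque
from statistics import mean, stdev


def detect_spikes(readings, window=5, threshold=3.0):
    """Yield (index, value) for readings far above the recent baseline."""
    recent = deque(maxlen=window)  # rolling window of recent normal readings
    for i, value in enumerate(readings):
        if len(recent) == window:
            baseline = mean(recent)
            spread = stdev(recent)
            # Flag values more than `threshold` standard deviations above baseline.
            if spread > 0 and (value - baseline) / spread > threshold:
                yield i, value
                continue  # keep the spike out of the baseline window
        recent.append(value)


# Hypothetical sensor stream (arbitrary concentration units) with one spike.
stream = [1.0, 1.1, 0.9, 1.0, 1.2, 1.1, 1.0, 5.0, 1.1, 0.9]
alerts = list(detect_spikes(stream))
print(alerts)
```

Because the function is a generator, it can consume readings one at a time as they arrive instead of waiting for a full batch. A production system would also need to handle sensor drift, missing values, and sustained contamination, which this sketch ignores.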

In clinical settings, AI systems can analyze patient symptoms, biomarker data, and exposure histories to rapidly identify potential toxin involvement in acute poisoning cases. This accelerated diagnosis can be lifesaving, enabling clinicians to initiate appropriate treatment protocols hours or even days earlier than conventional diagnostic approaches would permit.

Advanced Technologies Powering Toxin Analysis

Natural language processing algorithms mine scientific literature, extracting toxicological knowledge from millions of research papers, case reports, and regulatory documents. These systems create comprehensive knowledge graphs that map relationships between chemicals, biological systems, and toxic effects. Researchers can query these AI-generated knowledge bases to quickly access relevant information that might otherwise remain buried in the vast scientific literature.

Computer vision techniques analyze microscopic images from cellular toxicity assays, identifying subtle morphological changes that indicate toxic stress. These systems detect patterns invisible to human observers, quantifying cellular responses with unprecedented precision. The combination of high-content imaging and AI analysis enables screening thousands of chemical compounds for potential toxicity far more efficiently than traditional methods.

Predictive Modeling and Risk Assessment

AI-powered predictive models estimate toxicity of novel compounds before synthesis or testing, potentially identifying hazardous substances early in the development process. These in silico approaches leverage structural information and known structure-activity relationships to predict biological effects. While not replacing experimental validation, predictive toxicology dramatically reduces the time, cost, and animal testing required for safety assessment.

Quantitative structure-activity relationship (QSAR) models have existed for decades, but modern machine learning approaches significantly enhance their accuracy and applicability. Deep learning models can learn complex, non-linear relationships between chemical structure and biological activity that traditional statistical methods cannot capture. These advanced models provide more reliable predictions across broader chemical space.
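For context, the classical end of the QSAR spectrum can be as simple as a straight-line fit between one molecular descriptor and a measured activity. The sketch below shows that linear baseline, which machine learning models extend to many descriptors and non-linear relationships. The descriptor and activity values are synthetic numbers chosen for illustration, not measurements, and `fit_linear_qsar` is a hypothetical helper name.

```python
def fit_linear_qsar(descriptor, activity):
    """Ordinary least-squares fit of activity = slope * descriptor + intercept."""
    n = len(descriptor)
    mean_x = sum(descriptor) / n
    mean_y = sum(activity) / n
    sxx = sum((x - mean_x) ** 2 for x in descriptor)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(descriptor, activity))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic training set: a lipophilicity-like descriptor vs. a toxicity score.
descriptor = [1.0, 2.0, 3.0, 4.0]
activity = [1.8, 2.6, 3.4, 4.2]

slope, intercept = fit_linear_qsar(descriptor, activity)

# Predict the score for an untested compound with descriptor value 5.0.
predicted = slope * 5.0 + intercept
print(round(slope, 3), round(intercept, 3), round(predicted, 3))
```

A single-descriptor line cannot capture the interactions between structural features that the deep learning approaches described above are meant to learn, which is why it serves here only as a baseline.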

🌍 Environmental Monitoring Revolution

Environmental toxicology benefits enormously from AI-enhanced pattern-finding technology. Water quality monitoring systems equipped with AI analytics can detect emerging contaminants, track pollution sources, and predict contamination spread. These capabilities enable environmental agencies to respond more effectively to pollution incidents and implement preventive measures before widespread exposure occurs.

Agricultural applications include monitoring pesticide residues in food supplies, tracking environmental persistence of agricultural chemicals, and assessing cumulative exposure risks. AI systems integrate data from multiple monitoring points, weather patterns, agricultural practices, and hydrological models to create comprehensive risk assessments that inform regulatory decisions and farming practices.

Air Quality and Atmospheric Toxins

Urban air quality monitoring networks generate continuous streams of data on particulate matter, volatile organic compounds, and other airborne toxins. AI algorithms identify pollution sources, predict air quality trends, and issue health advisories. These systems account for complex factors including traffic patterns, industrial activity, weather conditions, and seasonal variations to provide accurate, localized air quality forecasts.

Industrial facilities utilize AI-powered monitoring to ensure emissions compliance and detect equipment malfunctions that might release toxic substances. Predictive maintenance algorithms identify potential problems before they result in environmental releases, protecting both public health and corporate interests.

Pharmaceutical Development and Drug Safety

The pharmaceutical industry employs AI-enhanced toxin analysis throughout the drug development pipeline. Early-stage screening identifies compounds with favorable therapeutic profiles and acceptable safety margins. This intelligent filtering focuses research resources on the most promising candidates, accelerating development timelines and reducing costs.

Post-market surveillance systems analyze adverse event reports, electronic health records, and social media to detect emerging safety signals. AI algorithms identify unexpected patterns of adverse reactions that might indicate previously unrecognized toxicities. This continuous monitoring enhances patient safety by enabling rapid response to emerging safety concerns.
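One long-established way to quantify a safety signal in adverse event reports is the proportional reporting ratio (PRR), a disproportionality statistic that more elaborate AI methods can build on. The sketch below computes it from a 2x2 table of report counts. The counts and the function name are invented for illustration.

```python
def proportional_reporting_ratio(a, b, c, d):
    """Compute the PRR from a 2x2 table of adverse event report counts.

    a: reports for the drug of interest that mention the event
    b: reports for the drug of interest that mention other events
    c: reports for all other drugs that mention the event
    d: reports for all other drugs that mention other events
    """
    rate_for_drug = a / (a + b)
    rate_for_others = c / (c + d)
    return rate_for_drug / rate_for_others


# Hypothetical counts: the event appears in 20% of this drug's reports
# but in only 1% of reports for all other drugs.
prr = proportional_reporting_ratio(a=20, b=80, c=100, d=9900)
print(round(prr, 2))
```

A ratio well above 1 suggests the event is reported disproportionately often for the drug. It does not establish causation, so a flagged signal would still need expert review.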

Precision Toxicology and Personalized Risk Assessment

Advances in genomics and personalized medicine enable AI systems to assess individual susceptibility to toxic substances. Genetic variations affecting drug metabolism, detoxification capacity, and target sensitivity create significant inter-individual differences in toxic response. AI models integrating genetic, physiological, and environmental data provide personalized risk assessments that support individualized exposure guidelines and treatment decisions.

Pharmacogenomic data combined with AI analytics helps clinicians predict which patients face elevated risks from specific medications. This information guides prescribing decisions, optimizing therapeutic benefit while minimizing adverse effects. As genetic testing becomes more accessible, personalized toxicological risk assessment will become standard practice.

⚡ Technical Infrastructure and Implementation

Implementing AI-powered toxin analysis systems requires robust technical infrastructure. Cloud computing platforms provide the computational resources necessary for training complex models and processing large datasets. Distributed computing architectures enable real-time analysis of streaming data from multiple sources simultaneously.

Data management systems must handle diverse data types including structured databases, unstructured text, images, and sensor streams. Data integration pipelines transform heterogeneous inputs into standardized formats suitable for machine learning algorithms. Quality control procedures ensure data accuracy and reliability, critical factors for generating trustworthy analytical results.
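A small piece of such an integration pipeline might look like the sketch below, which converts concentration records reported in different units into one standard form. The field names, unit labels, and sample records are assumptions for the example rather than any particular system's schema.

```python
# Conversion factors to a common unit (mg/L). The unit labels are assumed
# spellings; real feeds would need a fuller, validated mapping.
TO_MG_PER_L = {"g/L": 1e3, "mg/L": 1.0, "ug/L": 1e-3, "ng/L": 1e-6}


def standardize(record):
    """Return a record with a cleaned analyte name and a value in mg/L."""
    unit = record["unit"]
    if unit not in TO_MG_PER_L:
        raise ValueError(f"unsupported unit: {unit!r}")
    return {
        "analyte": record["analyte"].strip().lower(),
        "mg_per_l": record["value"] * TO_MG_PER_L[unit],
    }


# Hypothetical records from two sources that report the same analyte differently.
raw = [
    {"analyte": " Lead ", "value": 15.0, "unit": "ug/L"},
    {"analyte": "lead", "value": 0.02, "unit": "mg/L"},
]
clean = [standardize(r) for r in raw]
print(clean)
```

Rejecting unknown units outright, instead of passing them through, is one simple quality control choice: a silent unit mismatch would corrupt every downstream model.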

Model Development and Validation

Developing effective AI models for toxin analysis demands domain expertise combined with data science skills. Toxicologists work alongside machine learning engineers to design systems that address real-world analytical challenges. Feature engineering, the work of constructing and selecting the variables a model uses, requires a deep understanding of toxicological mechanisms and data characteristics.

Rigorous validation procedures ensure model reliability before deployment. Models are tested against independent datasets not used during training, assessing their ability to generalize to new situations. Performance metrics appropriate for toxicological applications guide model selection and optimization. Transparency and interpretability receive increasing emphasis, as users need to understand why models make particular predictions.
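Two metrics that often matter in this setting are sensitivity (the share of truly toxic samples the model catches) and specificity (the share of non-toxic samples it correctly clears). The sketch below computes both for a held-out test set. The labels and predictions are made up for illustration, with 1 meaning toxic and 0 meaning non-toxic.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, where 1 = toxic.

    Assumes the test set contains at least one toxic and one non-toxic sample.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # toxic, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # toxic, missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # safe, cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # safe, flagged
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical held-out labels and model predictions (not used in training).
held_out_truth = [1, 1, 1, 0, 0, 0, 0, 1]
held_out_pred = [1, 0, 1, 0, 0, 1, 0, 1]

sens, spec = sensitivity_specificity(held_out_truth, held_out_pred)
print(sens, spec)
```

Reporting the two numbers separately is useful because a missed toxin and a false alarm usually carry very different costs, and overall accuracy hides that difference.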

🎯 Overcoming Implementation Challenges

Despite tremendous potential, implementing AI in toxicology faces several obstacles. Data quality and availability represent significant challenges. Machine learning models require large, high-quality training datasets, but toxicological data often exists in fragmented, proprietary databases. Initiatives promoting data sharing and standardization help address this limitation, but progress remains gradual.

Regulatory acceptance of AI-generated evidence presents another hurdle. Regulatory agencies traditionally rely on standardized testing protocols and human expert review. Incorporating AI analytics into regulatory decision-making requires demonstrating reliability, reproducibility, and scientific validity. Guidelines for validating AI-based toxicological assessments are emerging but remain incomplete.

Ethical Considerations and Bias

AI systems can perpetuate or amplify biases present in training data. If toxicological datasets disproportionately represent certain populations or exposure scenarios, models may perform poorly for underrepresented groups. Ensuring fairness and equity in AI-powered toxicology requires careful attention to dataset composition and model validation across diverse populations.
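One basic check for this kind of bias is to break model performance down by subgroup instead of reporting a single overall score. The sketch below does that for accuracy. The group labels, outcomes, and predictions are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} from (group, true_label, predicted_label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical validation results; group "B" is underrepresented.
results = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 0), ("B", 0, 0),
]
per_group = accuracy_by_group(results)
print(per_group)
```

In this made-up example the overall accuracy is 5 out of 6, which would mask the fact that the model is right only half the time for group B.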

Privacy concerns arise when AI systems analyze personal health data or environmental exposures linked to specific individuals or communities. Implementing appropriate data protection measures while enabling beneficial research represents a delicate balance. Ethical frameworks guiding AI development in toxicology continue evolving as technology capabilities expand.

The Future Landscape of AI-Enhanced Toxicology

Looking forward, AI will become increasingly integrated into all aspects of toxicological science. Autonomous laboratories equipped with robotic systems and AI control algorithms will conduct high-throughput toxicity testing with minimal human intervention. These systems will design experiments, execute protocols, analyze results, and iteratively refine hypotheses, dramatically accelerating research productivity.

Digital twins—computational models simulating individual organisms or ecosystems—will enable in silico toxicity testing that reduces or eliminates animal use. These sophisticated models, powered by AI and mechanistic biological knowledge, will predict toxic responses across species and exposure scenarios. While complete replacement of experimental testing remains distant, digital approaches will increasingly complement and reduce traditional testing requirements.

Integration with Internet of Things

Widespread deployment of environmental sensors, wearable devices, and smart home technology creates opportunities for continuous, personalized exposure monitoring. AI systems will integrate data from these diverse sources, building comprehensive pictures of individual and population-level exposures. This information will enable proactive risk management and early intervention before health effects manifest.

Smart cities equipped with comprehensive sensor networks and AI analytics will monitor environmental quality in real time, optimizing traffic flows, industrial operations, and urban planning to minimize population exposures to toxic substances. These integrated systems represent a vision of technology-enabled public health protection operating at unprecedented scale and sophistication.

💡 Collaborative Intelligence: Humans and AI Working Together

The future of toxicology isn’t about AI replacing human experts, but rather about collaborative intelligence combining human insight with computational power. AI excels at processing vast datasets and identifying patterns, while humans provide contextual understanding, creative hypothesis generation, and ethical judgment. Effective systems leverage the strengths of both.

Decision support tools present AI-generated insights to toxicologists in intuitive formats that facilitate interpretation and decision-making. Visualization techniques help humans understand complex patterns identified by algorithms. Interactive systems allow experts to query AI models, exploring how different factors influence predictions and building confidence in computational recommendations.

Training the next generation of toxicologists requires integrating data science, AI literacy, and traditional toxicological knowledge. Educational programs increasingly emphasize computational skills alongside laboratory techniques. This interdisciplinary preparation ensures future professionals can effectively leverage AI tools while maintaining critical evaluation skills necessary for responsible application of technology.

🚀 Transformative Impact on Public Health

The ultimate goal of AI-enhanced toxin analysis is protecting and improving public health. Earlier detection of environmental hazards, more accurate risk assessments, safer pharmaceuticals, and personalized exposure guidelines all contribute to this mission. The technology enables proactive rather than reactive approaches to toxicological threats, preventing harm before exposure occurs.

Global health initiatives benefit from AI-powered toxicology, particularly in resource-limited settings where traditional analytical capabilities may be unavailable. Cloud-based AI tools democratize access to sophisticated analytical capabilities, supporting environmental monitoring and public health protection worldwide. These technologies help address environmental justice concerns by enabling comprehensive monitoring in underserved communities.

As climate change alters environmental conditions, new toxicological challenges emerge including changing disease vectors, altered chemical behaviors, and novel exposure scenarios. AI systems capable of identifying unexpected patterns and adapting to new conditions will prove essential for addressing these evolving threats. The flexibility and learning capacity of artificial intelligence make it uniquely suited for navigating uncertain futures.

The revolution in toxin data analysis powered by artificial intelligence represents more than technological advancement—it embodies a fundamental transformation in how we understand, predict, and manage toxic risks. By unleashing the pattern-finding capabilities of advanced AI systems, we gain unprecedented insight into the complex relationships between chemical exposures and biological effects. This knowledge empowers us to make more informed decisions, develop safer products, protect vulnerable populations, and create healthier environments for all. The journey has just begun, and the potential for positive impact continues expanding as technology capabilities grow and implementation challenges are overcome.

Toni Santos is a biological systems researcher and forensic science communicator focused on structural analysis, molecular interpretation, and botanical evidence studies. His work investigates how plant materials, cellular formations, genetic variation, and toxin profiles contribute to scientific understanding across ecological and forensic contexts.

With a multidisciplinary background in biological pattern recognition and conceptual forensic modeling, Toni translates complex mechanisms into accessible explanations that empower learners, researchers, and curious readers. His interests bridge structural biology, ecological observation, and molecular interpretation.

As the creator of zantrixos.com, Toni explores:

- Botanical Forensic Science: the role of plant materials in scientific interpretation
- Cellular Structure Matching: the conceptual frameworks behind cellular comparison and classification
- DNA-Based Identification: an accessible view of molecular markers and structural variation
- Toxin Profiling Methods: understanding toxin behavior and classification through conceptual models

Toni's work highlights the elegance and complexity of biological structures and invites readers to engage with science through curiosity, respect, and analytical thinking. Whether you're a student, researcher, or enthusiast, he encourages you to explore the details that shape biological evidence and inform scientific discovery.