Building annotated datasets is the cornerstone of precision cell matching research, enabling breakthroughs in regenerative medicine, immunotherapy, and personalized healthcare through accurate computational models.

🔬 The Foundation of Cell Matching Research

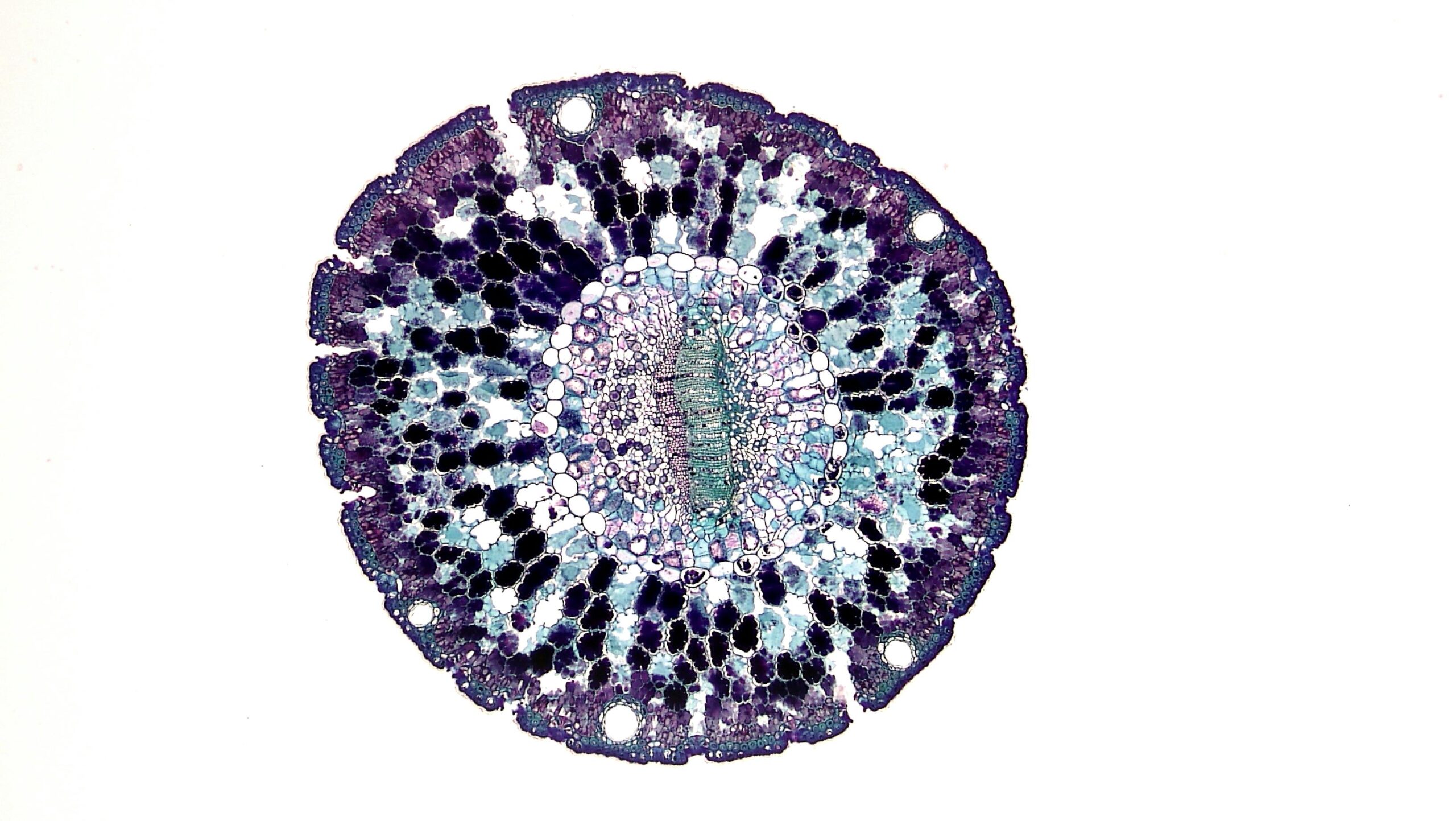

Precision cell matching has emerged as a critical discipline in modern biomedical research, where scientists match cells based on molecular signatures, morphological characteristics, and functional properties. The success of these matching algorithms depends heavily on the quality and comprehensiveness of the annotated datasets used during model training and validation.

Annotated datasets serve as the ground truth that machine learning algorithms learn from, identifying patterns and relationships between cellular characteristics. Without properly annotated data, even the most sophisticated algorithms will produce unreliable results that could compromise research outcomes or clinical applications.

The complexity of cellular biology requires annotation strategies that capture multiple dimensions of cell identity—from surface markers and gene expression profiles to spatial organization and temporal dynamics. This multidimensional approach ensures that matching algorithms can distinguish between subtle cell type variations that might be critical for therapeutic applications.

Understanding the Cell Matching Landscape 🧬

Cell matching research spans multiple domains, each with unique annotation requirements. Immune cell matching for transplantation requires different annotation strategies than cancer cell identification or stem cell characterization. Understanding these domain-specific needs is essential before beginning dataset construction.

In immunotherapy research, precise matching of T-cell receptors to target antigens demands detailed annotations of binding affinities, activation states, and functional outcomes. Stem cell research requires annotations that track differentiation potential and lineage commitment. Each application demands tailored annotation protocols that capture relevant biological information.

The emergence of single-cell technologies has revolutionized our ability to profile individual cells at unprecedented resolution. These technologies generate massive datasets that require sophisticated annotation frameworks capable of handling high-dimensional data while maintaining biological interpretability.

Key Components of Cell Matching Datasets

Successful annotated datasets for cell matching incorporate several critical components. First, they must include comprehensive metadata describing experimental conditions, sample sources, and processing protocols. This contextual information enables researchers to account for batch effects and technical variations that might confuse matching algorithms.

Second, annotations must be hierarchical, capturing cell identity at multiple levels of biological organization—from broad cell types down to specific functional states. This hierarchical structure allows algorithms to learn relationships between different levels of cellular organization.
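One simple way to represent such a hierarchy is a child-to-parent mapping. The sketch below is illustrative only: the labels, the `PARENT` table, and the helper names are assumptions, not a standard vocabulary.

```python
# Hypothetical label hierarchy: each label maps to its parent (None = root).
PARENT = {
    "immune cell": None,
    "T cell": "immune cell",
    "CD8+ T cell": "T cell",
    "exhausted CD8+ T cell": "CD8+ T cell",
    "B cell": "immune cell",
}

def ancestors(label):
    """Return the path from a label up to the root, most specific first."""
    path = []
    while label is not None:
        path.append(label)
        label = PARENT[label]
    return path

def agree_at_level(label_a, label_b):
    """Return the most specific label shared by both paths, or None."""
    shared = set(ancestors(label_b))
    for candidate in ancestors(label_a):
        if candidate in shared:
            return candidate
    return None

print(ancestors("exhausted CD8+ T cell"))
print(agree_at_level("exhausted CD8+ T cell", "B cell"))
```

Storing the full path lets a matching algorithm receive partial credit when two labels agree at a broad level but differ in functional state.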

Third, quality control annotations are essential, flagging low-quality cells, doublets, or technical artifacts that should be excluded from training data. These quality metrics ensure that algorithms learn from genuine biological signals rather than technical noise.
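Quality control annotations can be stored as explicit flags on each cell rather than by silently dropping cells. The following is a minimal sketch; the thresholds, field names, and the `doublet_score` input are placeholder assumptions that would need tuning for a given tissue and protocol.

```python
from dataclasses import dataclass

# Placeholder thresholds; appropriate values depend on tissue and protocol.
MIN_GENES = 200
MAX_MITO_FRACTION = 0.20
MAX_DOUBLET_SCORE = 0.5

@dataclass
class CellQC:
    cell_id: str
    n_genes: int           # genes detected in the cell
    mito_fraction: float   # fraction of counts from mitochondrial genes
    doublet_score: float   # score from an upstream doublet detector (assumed 0-1)

def qc_flags(cell):
    """Return a list of QC flags; an empty list means the cell passed."""
    flags = []
    if cell.n_genes < MIN_GENES:
        flags.append("low_gene_count")
    if cell.mito_fraction > MAX_MITO_FRACTION:
        flags.append("high_mito")
    if cell.doublet_score > MAX_DOUBLET_SCORE:
        flags.append("possible_doublet")
    return flags

cells = [
    CellQC("c1", 1500, 0.05, 0.1),
    CellQC("c2", 120, 0.35, 0.2),
    CellQC("c3", 4200, 0.04, 0.9),
]
for cell in cells:
    print(cell.cell_id, qc_flags(cell) or "pass")
```

Keeping flagged cells in the dataset, with the reason recorded, lets downstream users apply stricter or looser filters than the original authors did.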

Designing Your Annotation Strategy 📋

Before collecting a single data point, researchers must develop a comprehensive annotation strategy that aligns with their research objectives. This strategy should define what cellular features will be annotated, the annotation schema to be used, and the level of detail required for each annotation type.

The annotation schema should balance biological accuracy with practical feasibility. Overly complex schemas may be scientifically ideal but impractical to implement consistently across large datasets. Conversely, oversimplified schemas may miss critical distinctions that affect matching accuracy.

Consider creating a standardized annotation guide that defines each cell type, state, or feature being annotated. This guide should include visual examples, decision trees for ambiguous cases, and clear exclusion criteria. Such documentation ensures consistency when multiple annotators contribute to the dataset.

Selecting Appropriate Cell Populations

Dataset composition significantly impacts algorithm performance. Representative datasets should include sufficient examples of each cell type or state of interest, with particular attention to rare populations that might be underrepresented in random sampling.

Balanced datasets prevent algorithms from developing biases toward abundant cell types while ignoring rare but important populations. Strategic oversampling of rare cell types during dataset construction helps address this challenge without compromising the biological diversity of the training data.
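As a rough illustration of oversampling, the sketch below resamples rare classes with replacement until each reaches a minimum count, while keeping every original cell. The function name, the `min_per_class` parameter, and the example labels are assumptions made for this example.

```python
import random
from collections import Counter, defaultdict

def oversample(labels, min_per_class, seed=0):
    """Return indices into `labels`, resampling rare classes with replacement
    until each class has at least `min_per_class` entries."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    indices = []
    for label, members in by_class.items():
        indices.extend(members)  # keep every original cell
        shortfall = min_per_class - len(members)
        if shortfall > 0:
            indices.extend(rng.choices(members, k=shortfall))
    return indices

labels = ["T cell"] * 90 + ["B cell"] * 8 + ["pDC"] * 2
picked = oversample(labels, min_per_class=10)
print(Counter(labels[i] for i in picked))
```

Because resampled cells are duplicates, oversampling is usually applied only to the training split after the validation and test cells have been set aside.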

Include both typical and edge cases in your dataset. While clear examples help algorithms learn core patterns, ambiguous cases at the boundaries between cell types improve robustness and generalization to real-world scenarios where cells don’t always fit neatly into predefined categories.

Technology Platforms for Data Collection 🔧

Modern cell matching datasets integrate information from multiple technology platforms, each providing complementary views of cell identity. Flow cytometry provides quantitative protein expression data, single-cell RNA sequencing reveals transcriptional states, and imaging technologies capture morphological and spatial information.

Single-cell RNA sequencing has become a standard method for comprehensive cell profiling, measuring the expression of thousands of genes in each individual cell. These high-dimensional datasets require annotation approaches that map gene expression patterns to known cell types while remaining open to discovering novel populations.

Mass cytometry (CyTOF) enables simultaneous measurement of dozens of protein markers, providing high-resolution immunophenotyping data. The increased dimensionality compared to traditional flow cytometry allows more precise cell type discrimination but requires careful panel design and quality control to ensure reliable annotations.

Integrating Multi-Modal Data

The most powerful annotated datasets integrate information across multiple modalities, linking transcriptional profiles with protein expression, morphology, and functional assays. Multi-modal integration provides a more complete picture of cell identity than any single technology alone.

However, multi-modal integration introduces technical challenges. Different technologies have different sources of noise, batch effects, and measurement scales. Annotation protocols must account for these technical differences while preserving biological signal across modalities.

Technologies like CITE-seq measure RNA and surface proteins in the same cells, simplifying multi-modal integration. These paired measurements enable direct comparison between transcriptional and proteomic cell identities and can reveal discrepancies between RNA and protein levels, such as those caused by post-transcriptional regulation, that might affect cell matching accuracy.

The Annotation Process: From Raw Data to Ground Truth 🎯

Converting raw cellular measurements into annotated ground truth requires systematic workflows that combine automated preprocessing with expert biological interpretation. Initial data processing steps—quality filtering, normalization, and dimensionality reduction—prepare data for annotation while removing technical artifacts.

Clustering algorithms provide an initial organization of cells into putative groups based on similarity. However, these computational clusters don’t automatically correspond to biological cell types. Expert annotators must evaluate each cluster, assigning biological identities based on marker expression, literature knowledge, and experimental context.
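A first-pass label suggestion for each cluster can be computed by comparing its top-expressed genes against known marker sets, leaving the final call to an expert. This is a minimal sketch: the `MARKERS` table is a small illustrative panel and the `min_overlap` threshold is an assumption, not a recommended value.

```python
# Hypothetical marker sets; real panels should come from the annotation guide.
MARKERS = {
    "T cell": {"CD3D", "CD3E", "CD2"},
    "B cell": {"CD79A", "MS4A1", "CD19"},
    "Monocyte": {"CD14", "LYZ", "FCGR3A"},
}

def suggest_label(cluster_top_genes, min_overlap=2):
    """Suggest a label for a cluster from its top-expressed genes.

    Returns (label, overlap) for the best-matching marker set, or
    ("unassigned", overlap) when the best overlap is below `min_overlap`.
    """
    top = set(cluster_top_genes)
    best_label, best_overlap = "unassigned", 0
    for label, markers in MARKERS.items():
        overlap = len(top & markers)
        if overlap > best_overlap:
            best_label, best_overlap = label, overlap
    if best_overlap < min_overlap:
        return "unassigned", best_overlap
    return best_label, best_overlap

clusters = {
    0: ["CD3D", "CD3E", "IL7R", "CCR7"],
    1: ["MS4A1", "CD79A", "CD19", "HLA-DRA"],
    2: ["GNLY", "NKG7", "CD2"],
}
for cluster_id, genes in clusters.items():
    print(cluster_id, suggest_label(genes))
```

Clusters that come back as "unassigned" are the ones most in need of expert review, since they may represent populations missing from the marker table.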

The annotation process should be iterative, with initial annotations refined through successive rounds of review and validation. This iterative approach allows annotators to develop increasingly sophisticated understanding of the dataset while identifying and correcting early annotation errors.

Ensuring Annotation Consistency

Annotation consistency is critical when multiple experts contribute to dataset creation. Inter-annotator variability can introduce noise that degrades algorithm performance. Regular calibration sessions where annotators review and discuss challenging cases help maintain consistency across the annotation team.

Quantitative metrics can assess annotation agreement, identifying areas where annotators disagree and require additional discussion or clearer guidelines. Calculate inter-rater reliability scores, such as Cohen's kappa for two annotators or Fleiss' kappa for larger teams, periodically throughout the annotation process to monitor and maintain quality.
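Cohen's kappa is one common inter-rater reliability score for two annotators; it corrects raw agreement for the agreement expected by chance. The sketch below computes it from scratch, and the example labels are made up for illustration.

```python
from collections import Counter

def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same cells."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and equal in length")
    n = len(labels_a)
    # Observed agreement: fraction of cells given the same label by both.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label
    return (observed - expected) / (1 - expected)

annotator_1 = ["T", "T", "B", "B", "NK", "T", "B", "NK"]
annotator_2 = ["T", "T", "B", "T", "NK", "T", "B", "B"]
print(round(cohen_kappa(annotator_1, annotator_2), 3))
```

Computing kappa separately for each cell type can show which categories drive disagreement, which is often more actionable than a single overall score.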

Consider implementing a hierarchical annotation workflow where junior annotators handle straightforward cases while senior experts review ambiguous cells and resolve disagreements. This approach balances efficiency with quality while providing training opportunities for new annotators.

Validation and Quality Control Measures ✅

Rigorous validation distinguishes high-quality annotated datasets from collections of arbitrary labels. Multiple validation strategies should be employed, combining computational checks with biological validation to ensure annotation accuracy and consistency.

Hold-out validation sets provide an independent assessment of annotation quality. Set aside a subset of cells, then have independent experts annotate them without access to the original annotations. High agreement between the two sets of labels suggests the ground truth is reliable, while disagreement points to cell types or guidelines that need review.

Biological validation through orthogonal technologies confirms computational annotations. For example, if single-cell RNA sequencing suggests a particular cell type, validate this annotation through protein expression, morphology, or functional assays that provide independent confirmation of cell identity.

Addressing Annotation Errors and Uncertainties

No annotation process is perfect, and acknowledging uncertainty is more scientifically honest than forcing definitive labels on ambiguous cells. Consider implementing confidence scores that indicate annotation certainty, allowing downstream algorithms to weight training examples appropriately.
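One possible way to carry confidence scores into training is to convert them into per-cell weights. In this sketch the `Annotation` record, the `floor` cutoff, and the weighting rule are all assumptions; other schemes, such as soft labels, may suit a given model better.

```python
from dataclasses import dataclass

@dataclass
class Annotation:
    cell_id: str
    label: str
    confidence: float  # annotator-assigned certainty in [0, 1]

def training_weights(annotations, floor=0.3):
    """Map each cell to a training weight derived from its confidence.

    Cells below `floor` get weight 0.0, so they stay in the dataset but
    are ignored during training; others are weighted by confidence.
    """
    weights = {}
    for ann in annotations:
        if not 0.0 <= ann.confidence <= 1.0:
            raise ValueError(f"confidence out of range for {ann.cell_id}")
        weights[ann.cell_id] = ann.confidence if ann.confidence >= floor else 0.0
    return weights

annotations = [
    Annotation("c1", "T cell", 0.95),
    Annotation("c2", "T cell", 0.50),
    Annotation("c3", "NK cell", 0.10),
]
print(training_weights(annotations))
```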

Systematic error analysis helps identify patterns in annotation mistakes. Are certain cell types consistently misannotated? Do specific technical conditions correlate with annotation errors? Understanding error patterns guides refinement of annotation protocols and algorithms.
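Both questions can be answered by comparing original labels against reviewed labels. The sketch below counts which label pairs are most often confused and how often labels changed within each group; the data and the choice of batch as the grouping variable are illustrative assumptions.

```python
from collections import Counter

def confusion_pairs(original, reviewed):
    """Count (original, reviewed) pairs where review changed the label."""
    return Counter((o, r) for o, r in zip(original, reviewed) if o != r)

def error_rate_by_group(original, reviewed, groups):
    """Fraction of changed labels within each group (e.g. batch or cell type)."""
    total, changed = Counter(), Counter()
    for o, r, g in zip(original, reviewed, groups):
        total[g] += 1
        changed[g] += int(o != r)
    return {g: changed[g] / total[g] for g in total}

original = ["T", "T", "NK", "NK", "B", "NK"]
reviewed = ["T", "NK", "NK", "T", "B", "T"]
batches = ["b1", "b1", "b1", "b2", "b2", "b2"]

print(confusion_pairs(original, reviewed).most_common())
print(error_rate_by_group(original, reviewed, batches))
```

In this toy example, NK cells relabeled as T cells dominate the corrections and batch b2 has the higher change rate, which would suggest reviewing both the NK/T decision rule and that batch's processing.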

Establish clear procedures for correcting errors discovered after initial annotation. Maintain version control for datasets, documenting changes between versions so researchers using the data understand how annotations evolved over time.

Standardization and Metadata Requirements 📊

Standardized annotation vocabularies enable cross-dataset comparisons and facilitate data sharing within the research community. Ontologies like the Cell Ontology provide controlled vocabularies that ensure different researchers describe the same cell types consistently.
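In practice, free-text labels from different annotators need to be mapped onto ontology identifiers. The sketch below shows one simple approach using a synonym table; the table is a tiny hand-written assumption, and the Cell Ontology IDs shown should be verified against the current ontology release before use.

```python
# Free-text synonyms mapped to Cell Ontology IDs. The IDs below are
# believed correct but should be checked against the current CL release.
SYNONYM_TO_CL = {
    "t cell": "CL:0000084",
    "t lymphocyte": "CL:0000084",
    "b cell": "CL:0000236",
    "b lymphocyte": "CL:0000236",
    "monocyte": "CL:0000576",
}

def normalize(label):
    """Lowercase, replace hyphens and underscores, and collapse whitespace."""
    return " ".join(label.lower().replace("-", " ").replace("_", " ").split())

def to_ontology_id(label):
    """Return the ontology ID for a free-text label, or None if unmapped."""
    return SYNONYM_TO_CL.get(normalize(label))

for raw in ["T-cell", "B_Lymphocyte", "  monocyte ", "mystery cell"]:
    print(repr(raw), "->", to_ontology_id(raw))
```

Labels that return `None` can be collected into a review queue instead of being guessed, so that new synonyms are added to the table deliberately.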

Comprehensive metadata documentation is as important as the annotations themselves. Record experimental protocols, instrument settings, reagent information, sample demographics, and processing parameters. This metadata enables researchers to understand dataset context and assess applicability to their specific research questions.
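A lightweight completeness check can catch missing metadata before a dataset is released. The required field names below are assumptions chosen for illustration; a real project would take them from whichever reporting standard it follows.

```python
# Hypothetical required fields; adapt to the reporting standard in use.
REQUIRED_FIELDS = ("sample_id", "tissue", "protocol", "instrument", "processing_date")

def missing_metadata(record):
    """Return required fields that are absent or empty in a metadata record."""
    return [f for f in REQUIRED_FIELDS if not str(record.get(f) or "").strip()]

record = {
    "sample_id": "S001",
    "tissue": "peripheral blood",
    "protocol": "",
    "instrument": "sequencer A",
}
print(missing_metadata(record))
```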

Follow community reporting guidelines such as minSCe (Minimum Information about a Single-Cell Experiment) when documenting datasets. Adherence to established standards improves dataset reusability and facilitates integration with public databases and analysis platforms.

Building Dataset Documentation

Excellent documentation transforms annotated datasets from personal resources into community assets. Create comprehensive documentation that describes dataset contents, annotation methodology, known limitations, and suggested use cases.

Include example code demonstrating how to load and work with the dataset in common analysis environments. This practical documentation lowers barriers to dataset adoption and ensures researchers can effectively utilize the resource you’ve created.
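Such example code can be very short. The sketch below assumes a hypothetical annotation table with `cell_id`, `cell_type`, and `confidence` columns and uses an in-memory string in place of a real file so that it runs as-is.

```python
import csv
import io
from collections import Counter

# Stand-in for a hypothetical annotations.csv shipped with a dataset.
EXAMPLE_CSV = """cell_id,cell_type,confidence
c1,T cell,0.95
c2,B cell,0.80
c3,T cell,0.40
"""

def load_annotations(handle):
    """Read annotation rows and convert the confidence column to float."""
    rows = []
    for row in csv.DictReader(handle):
        row["confidence"] = float(row["confidence"])
        rows.append(row)
    return rows

annotations = load_annotations(io.StringIO(EXAMPLE_CSV))
print(Counter(row["cell_type"] for row in annotations))
```

With a real file, the same function would be called on an open file handle, for example `with open(path, newline="") as handle:`.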

Publish dataset descriptions in peer-reviewed journals or data journals, providing citable references that acknowledge the effort invested in dataset creation while improving discoverability through scientific literature searches.

Computational Tools for Dataset Construction 💻

Numerous software tools facilitate annotated dataset construction for cell matching research. Scanpy and Seurat provide comprehensive environments for single-cell data analysis, including visualization and clustering tools that support annotation workflows.

Specialized tools such as Cellenics, Loupe Browser, and CellTypist streamline cell type labeling. Cellenics and Loupe Browser provide graphical interfaces for exploring clusters and assigning labels, while CellTypist predicts labels automatically from pre-trained reference models. Suggestions from such tools are typically based on marker expression patterns or reference data and should still be reviewed by an expert.

Machine learning-assisted annotation tools leverage existing annotated datasets to suggest labels for new data, accelerating the annotation process while maintaining human oversight for quality control. Transfer learning approaches apply knowledge from well-annotated reference datasets to new experimental data.

Scaling Annotation Efforts for Large Datasets 📈

Modern single-cell experiments and atlas projects can generate datasets containing hundreds of thousands to millions of cells, creating annotation challenges that exceed manual capacity. Scaling annotation efforts requires combining automated methods with strategic human oversight focused on quality control and difficult cases.

Active learning strategies identify the most informative cells for manual annotation, maximizing information gain from limited expert time. By directing annotation effort toward cells whose labels the current model is least certain about, active learning can achieve high-quality results with fewer manually annotated examples.
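Uncertainty sampling is one simple active learning strategy: rank cells by the entropy of the model's predicted label distribution and send the highest-entropy cells to experts. The sketch below assumes the predicted probabilities already exist; the cell IDs and values are invented for illustration.

```python
import math

def entropy(probs):
    """Shannon entropy (in bits) of a predicted label distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def most_uncertain(predictions, k):
    """Return the k cell IDs whose predictions have the highest entropy."""
    ranked = sorted(predictions, key=lambda cid: entropy(predictions[cid]), reverse=True)
    return ranked[:k]

# Hypothetical per-cell probabilities over three candidate cell types.
predictions = {
    "c1": [0.98, 0.01, 0.01],
    "c2": [0.40, 0.35, 0.25],
    "c3": [0.70, 0.20, 0.10],
    "c4": [0.34, 0.33, 0.33],
}
print(most_uncertain(predictions, k=2))
```

Entropy is only one possible criterion; margin-based or diversity-aware selection may work better when uncertain cells cluster in a single region of expression space.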

Crowdsourcing approaches distribute annotation tasks across many contributors, enabling rapid annotation of large datasets. However, crowdsourcing requires careful quality control, clear task design, and aggregation methods that synthesize multiple potentially conflicting annotations into consensus labels.
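Majority voting is the simplest aggregation method for conflicting labels. The sketch below returns no consensus when the top labels are tied or agreement is too low; the `min_agreement` threshold is an assumption, and weighted schemes that model annotator reliability are a common alternative.

```python
from collections import Counter

def consensus(votes, min_agreement=0.6):
    """Aggregate one cell's labels from several contributors.

    Returns (label, agreement). The label is None when the top labels
    are tied or the top label's vote share is below `min_agreement`.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    counts = Counter(votes).most_common()
    top_label, top_count = counts[0]
    agreement = top_count / len(votes)
    tied = len(counts) > 1 and counts[1][1] == top_count
    if tied or agreement < min_agreement:
        return None, agreement
    return top_label, agreement

print(consensus(["T", "T", "T", "B"]))
print(consensus(["T", "B"]))
print(consensus(["T", "T", "B", "B", "NK"]))
```

Cells that return `None` are natural candidates for escalation to expert reviewers.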

Ethical Considerations and Data Privacy 🔒

Annotated datasets derived from human samples raise important ethical considerations regarding consent, privacy, and data sharing. Ensure appropriate informed consent covers dataset creation and sharing, particularly for sensitive applications like disease research or genetic studies.

De-identification procedures must balance privacy protection with research utility. Remove or encrypt personally identifiable information while preserving biological metadata necessary for dataset interpretation. Follow applicable regulations like HIPAA or GDPR depending on data origin and intended distribution.
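One common building block is replacing donor identifiers with keyed hashes while dropping direct identifiers. The sketch below is illustrative only: the field names and key handling are assumptions, and keyed hashing is pseudonymization rather than anonymization, so it may not satisfy a given regulation on its own.

```python
import hashlib
import hmac

def pseudonymize(donor_id, secret_key):
    """Replace a donor identifier with a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(secret_key, donor_id.encode("utf-8"), hashlib.sha256)
    return "donor_" + digest.hexdigest()[:16]

def deidentify(record, secret_key, drop_fields=("name", "date_of_birth")):
    """Return a copy without direct identifiers and with a pseudonymous ID."""
    cleaned = {k: v for k, v in record.items() if k not in drop_fields}
    cleaned["donor_id"] = pseudonymize(record["donor_id"], secret_key)
    return cleaned

# In practice the key would be stored securely, never alongside the data.
key = b"example-key-for-illustration-only"
record = {
    "donor_id": "MRN-12345",
    "name": "Jane Doe",
    "date_of_birth": "1980-01-01",
    "tissue": "bone marrow",
    "age_range": "40-49",
}
print(deidentify(record, key))
```

Using a keyed hash keeps samples from the same donor linked to one another, which is needed for batch correction, without exposing the original identifier.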

Consider differential privacy approaches, which add calibrated noise to released statistics or to model training so that individual contributions are protected while population-level patterns remain useful for algorithm training. These mathematical techniques provide quantifiable privacy guarantees, which can help when demonstrating compliance with data protection requirements.
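The Laplace mechanism is a basic differential privacy technique for releasing counts. The sketch below adds Laplace noise with scale 1/epsilon to donor counts, assuming each donor contributes to at most one count (sensitivity 1). It is for illustration only; the group names are made up, and real deployments should rely on a vetted library.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_counts(counts, epsilon, seed=None):
    """Add Laplace noise with scale 1/epsilon to each count.

    Assumes each donor contributes to at most one count (sensitivity 1).
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = random.Random(seed)
    return {k: v + laplace_noise(1.0 / epsilon, rng) for k, v in counts.items()}

# Hypothetical number of donors per study group.
donors_per_group = {"healthy": 120, "disease_A": 45, "disease_B": 7}
print(private_counts(donors_per_group, epsilon=1.0, seed=42))
```

Small groups such as `disease_B` are affected most by the noise, which is the intended trade-off: rare individuals are the ones most at risk of re-identification.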

Sharing and Disseminating Your Dataset 🌐

Public data sharing multiplies the impact of annotated datasets, enabling reproducibility, method comparison, and accelerated discovery across the research community. Deposit datasets in established repositories like the Gene Expression Omnibus (GEO), the Single Cell Portal, or CZ CELLxGENE to ensure long-term accessibility.

Choose repositories that provide persistent identifiers, version control, and comprehensive metadata support. These features ensure your dataset remains discoverable and interpretable long after publication, maximizing scientific impact.

Consider licensing implications when sharing datasets. Open licenses like Creative Commons facilitate reuse while ensuring appropriate attribution. Clearly specify any use restrictions, particularly for datasets containing sensitive human data that may have consent-based limitations.

The Future of Annotated Cell Datasets 🚀

Emerging technologies continue to expand what can be measured at single-cell resolution, from spatial transcriptomics capturing tissue context to lineage tracing revealing developmental relationships. Future annotated datasets will integrate these additional dimensions, creating increasingly comprehensive representations of cell identity.

Artificial intelligence advances are transforming annotation from primarily manual to increasingly automated processes. Foundation models trained on massive cell datasets learn general representations of cell identity that transfer across contexts, potentially revolutionizing how we approach cell matching and classification.

Standardization efforts are maturing, with community-wide initiatives developing common data formats, annotation ontologies, and quality metrics. These standards will facilitate dataset integration across studies and institutions, creating meta-datasets of unprecedented scale and diversity.

The convergence of high-throughput experimentation, standardized annotation frameworks, and advanced computational methods positions cell matching research for transformative breakthroughs. Well-constructed annotated datasets are the essential foundation enabling these advances, translating biological complexity into computational formats that unlock precision medicine’s full potential.

Investing time and resources in building high-quality annotated datasets pays compound returns through improved algorithm performance, enhanced reproducibility, and accelerated scientific discovery. As cell-based therapies and precision diagnostics move from research to clinical practice, the quality of underlying training data will directly impact patient outcomes, making dataset construction not just a scientific endeavor but a clinical imperative.

Toni Santos is a biological systems researcher and forensic science communicator focused on structural analysis, molecular interpretation, and botanical evidence studies. His work investigates how plant materials, cellular formations, genetic variation, and toxin profiles contribute to scientific understanding across ecological and forensic contexts.

With a multidisciplinary background in biological pattern recognition and conceptual forensic modeling, Toni translates complex mechanisms into accessible explanations that empower learners, researchers, and curious readers. His interests bridge structural biology, ecological observation, and molecular interpretation.

As the creator of zantrixos.com, Toni explores:

- Botanical Forensic Science: the role of plant materials in scientific interpretation
- Cellular Structure Matching: the conceptual frameworks behind cellular comparison and classification
- DNA-Based Identification: an accessible view of molecular markers and structural variation
- Toxin Profiling Methods: understanding toxin behavior and classification through conceptual models

Toni's work highlights the elegance and complexity of biological structures and invites readers to engage with science through curiosity, respect, and analytical thinking. Whether you're a student, researcher, or enthusiast, he encourages you to explore the details that shape biological evidence and inform scientific discovery.